6.7 | Dataflows

6.7.1 | Overview

One of the most important and valuable components of the Power BI Online Service are dataflows. Essentially, with a dataflow we can use Power Query directly within the browser and connect with one or many data sources and prepare and load the data into the desired output tables. With that, a dataflow stores one or many data tables in the Online Service, ready to be used by subsequent applications which in most cases will be one or many Power BI datasets.

A dataflow can be scheduled to automatically run at certain times and refresh the output tables accordingly.

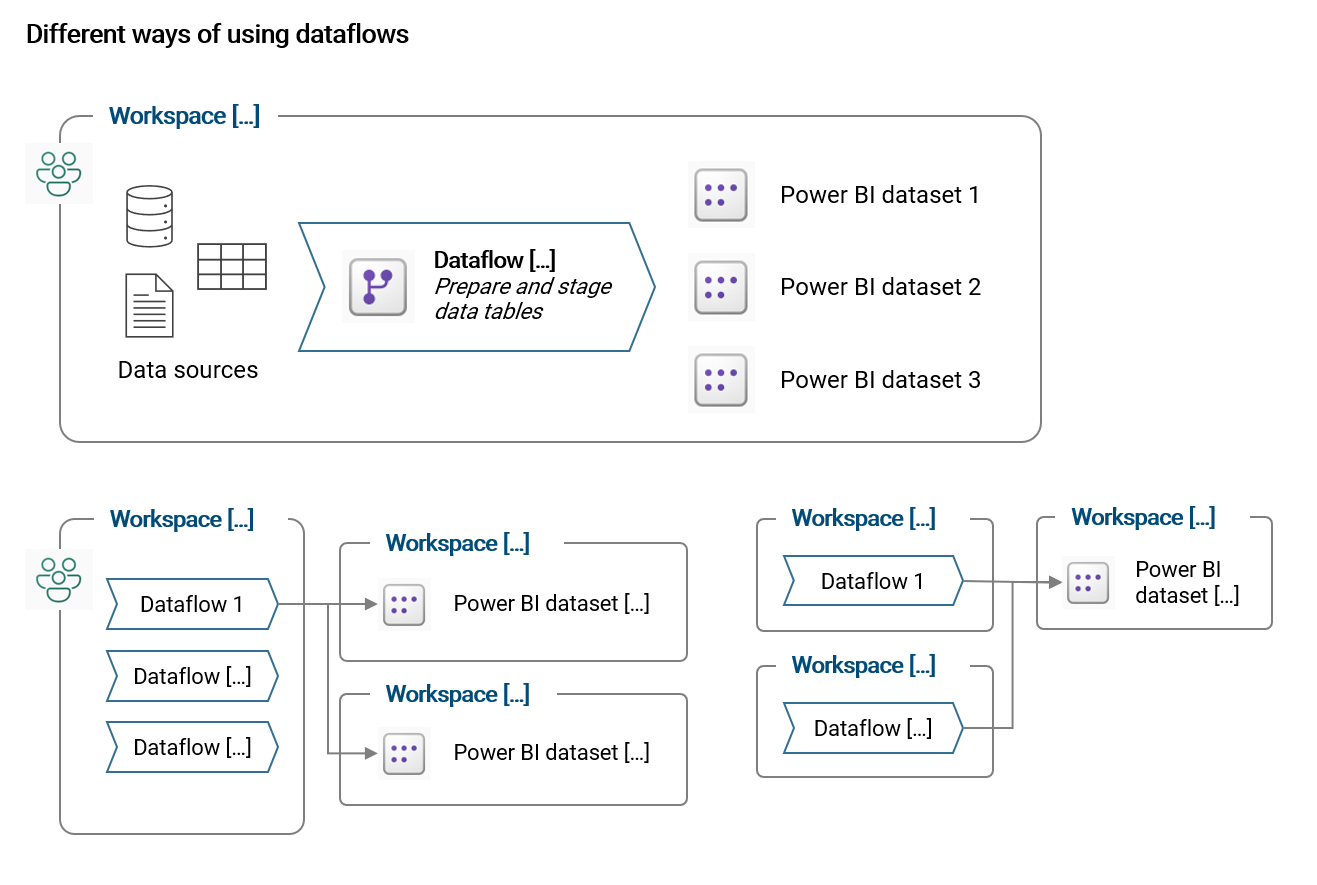

As mentioned, the output tables of a dataflow can be accessed by Power BI datasets. Furthermore, a dataflow can also access another dataflow, which gives us the possibility to chain several flows if required in more complex setups.

Dataflows can be organized in the same workspace as the respective datasets. Alternatively, they can be separated from the datasets into individual workspaces. The illustration below shows different ways of using dataflows:

In general, Dataflows are highly useful tools to scale Power BI within an organization. Because it gives us the possibility to prepare and enable re-usage of important data tables for many Datasets (or business teams).

6.7.2 | Use-cases for dataflows

The most important use-cases for dataflows are the following:

- Staging of data tables: When a data source is slow with responding to queries, it can make sense to use dataflows as an intermediate data storage (i.e. called staging) for large data tables. Typical cases are large transactional tables (e.g. a general ledger from an ERP system). It not only speeds up development work in Power BI Desktop, but also it decreases computational burden on the original data source

- Re-using data tables in multiple Power BI datasets: For solutions with multiple datasets, it is often the case that certain data tables are used in several different datasets. Instead of connecting and preparing the same tables in each individual dataset over and over again, it makes sense to centralize these processes in dataflows and then simply connect with the already prepared tables. This more centralized approach also allows for an easier change management

- Sharing of data tables with power users: For large scale implementations with multiple data teams, it makes sense to re-use centrally prepared data tables by power users. Data tables can be better standardized this way and chances are higher that analytical results are identical between the teams. Please note, control of who can access a dataflow is only possible via workspace roles as of the time of writing this text (see also limitations below)

6.7.3 | How to create, save and run a dataflow

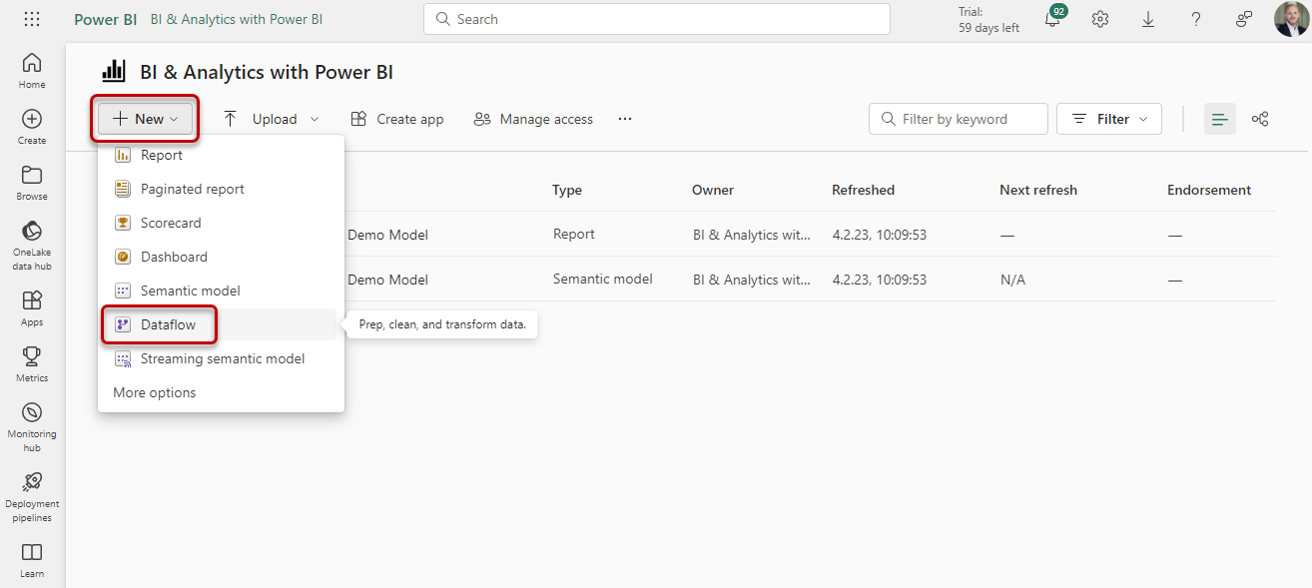

1. Navigate to a workspace in the Power BI Online Service and create a new dataflow

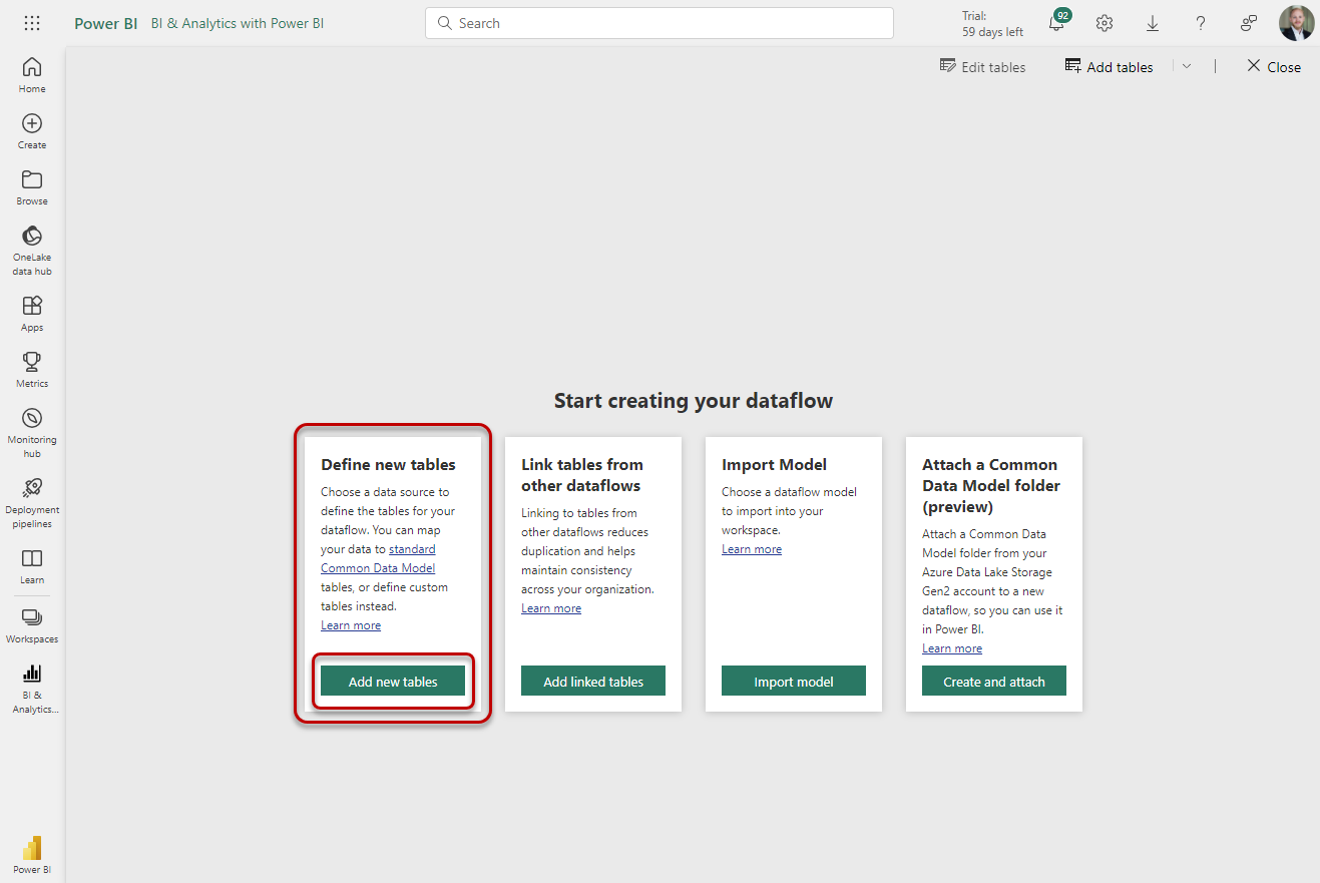

2. Select Add new tables

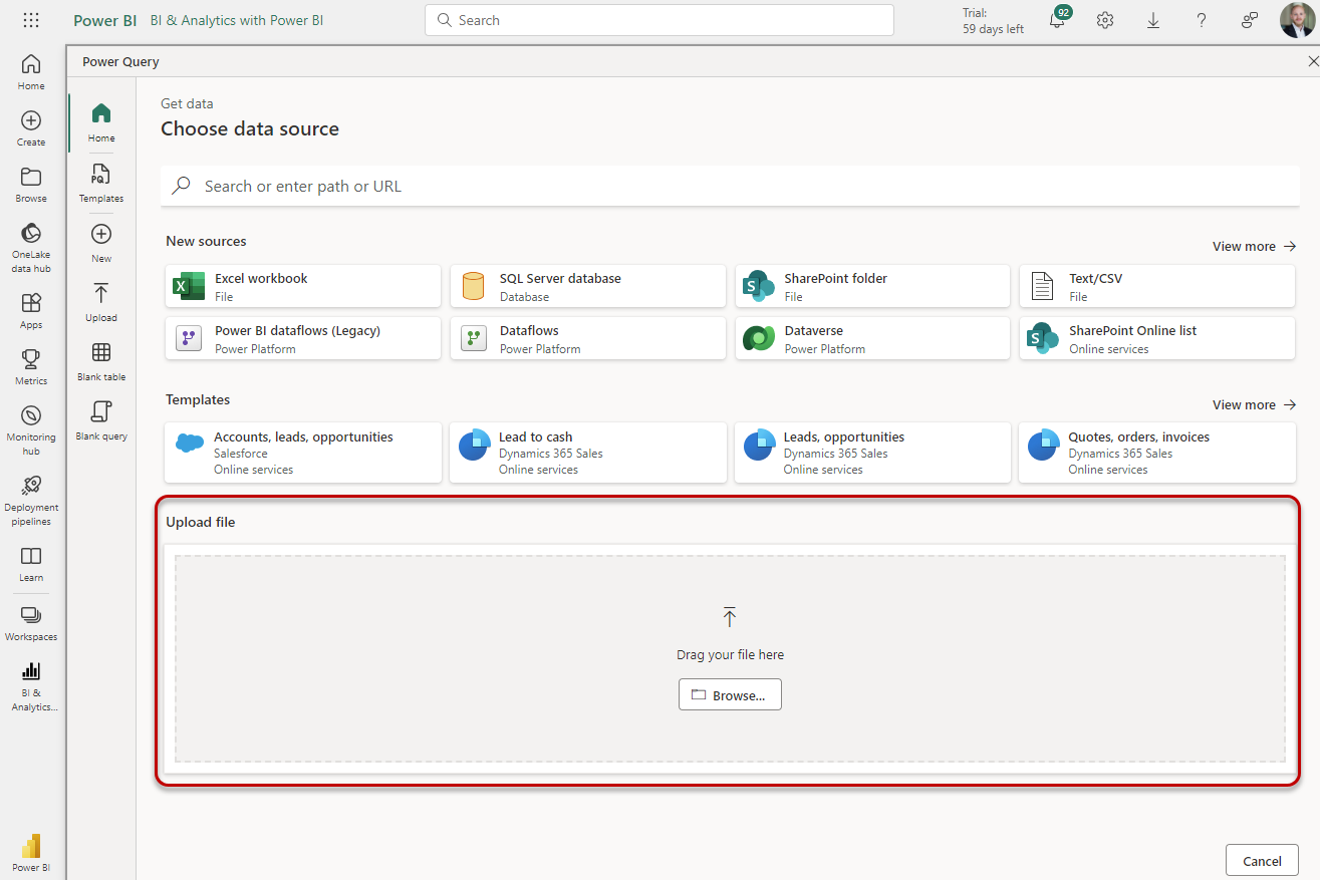

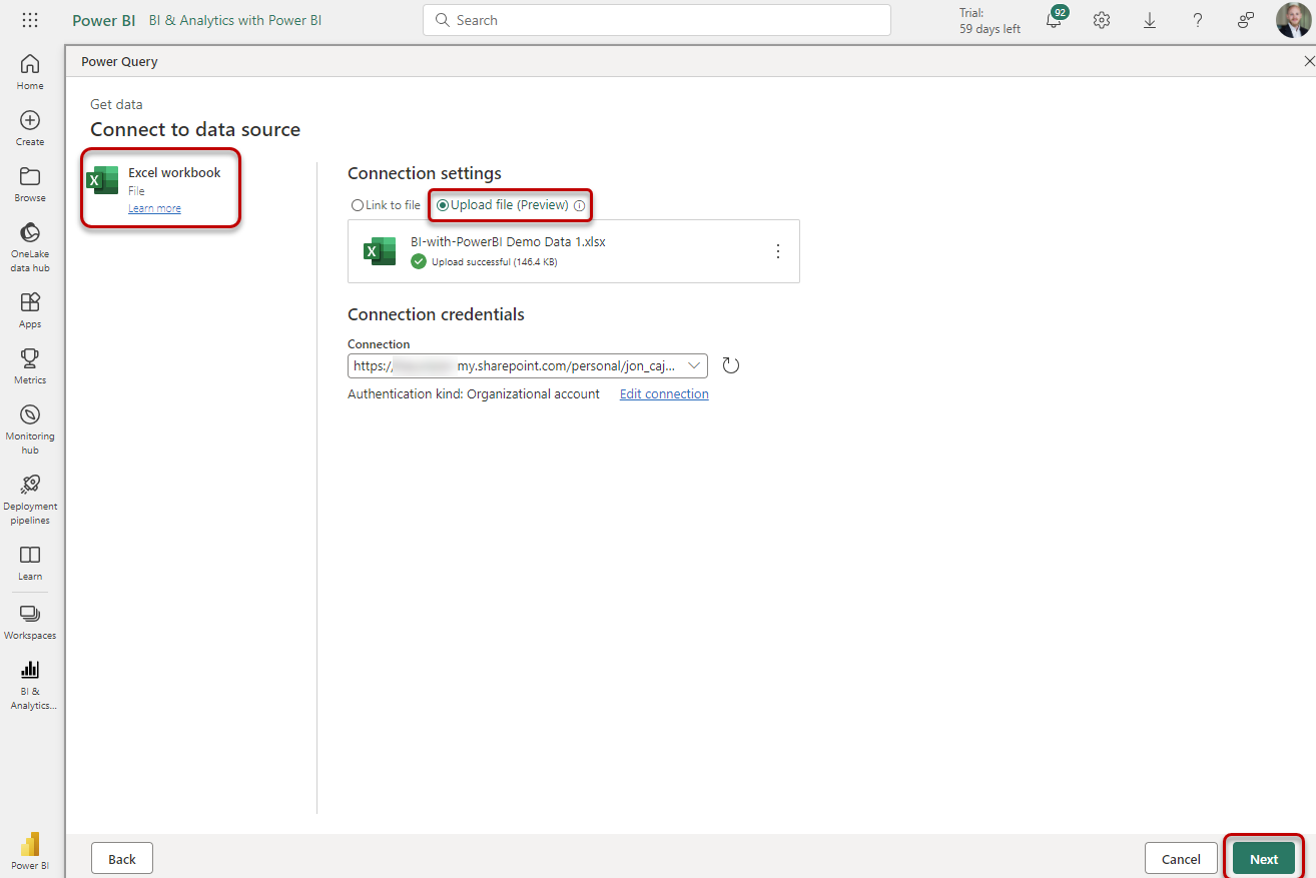

3. Upload the Excel file with the sales demo data

Please note, instead of uploading we could also link directly to the file if it is saved in OneDrive (or Sharepoint).

Of course we can use here all data connectors which are available also in Power BI Desktop. Further, it is also possible to use a Data Gateway here to access on-premise data sources.

4. Confirm to connect to data source

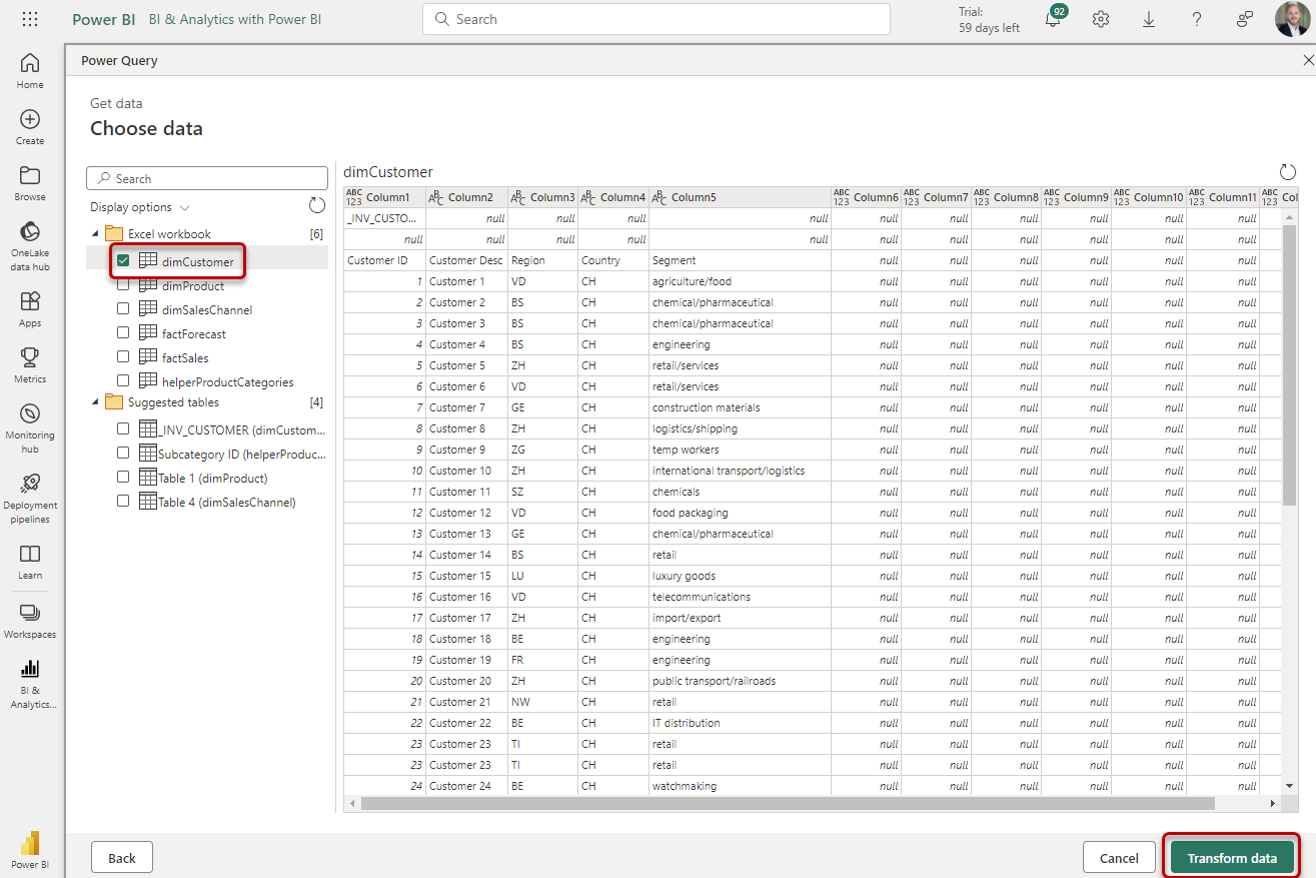

5. Select the tables to be used in the Dataflow

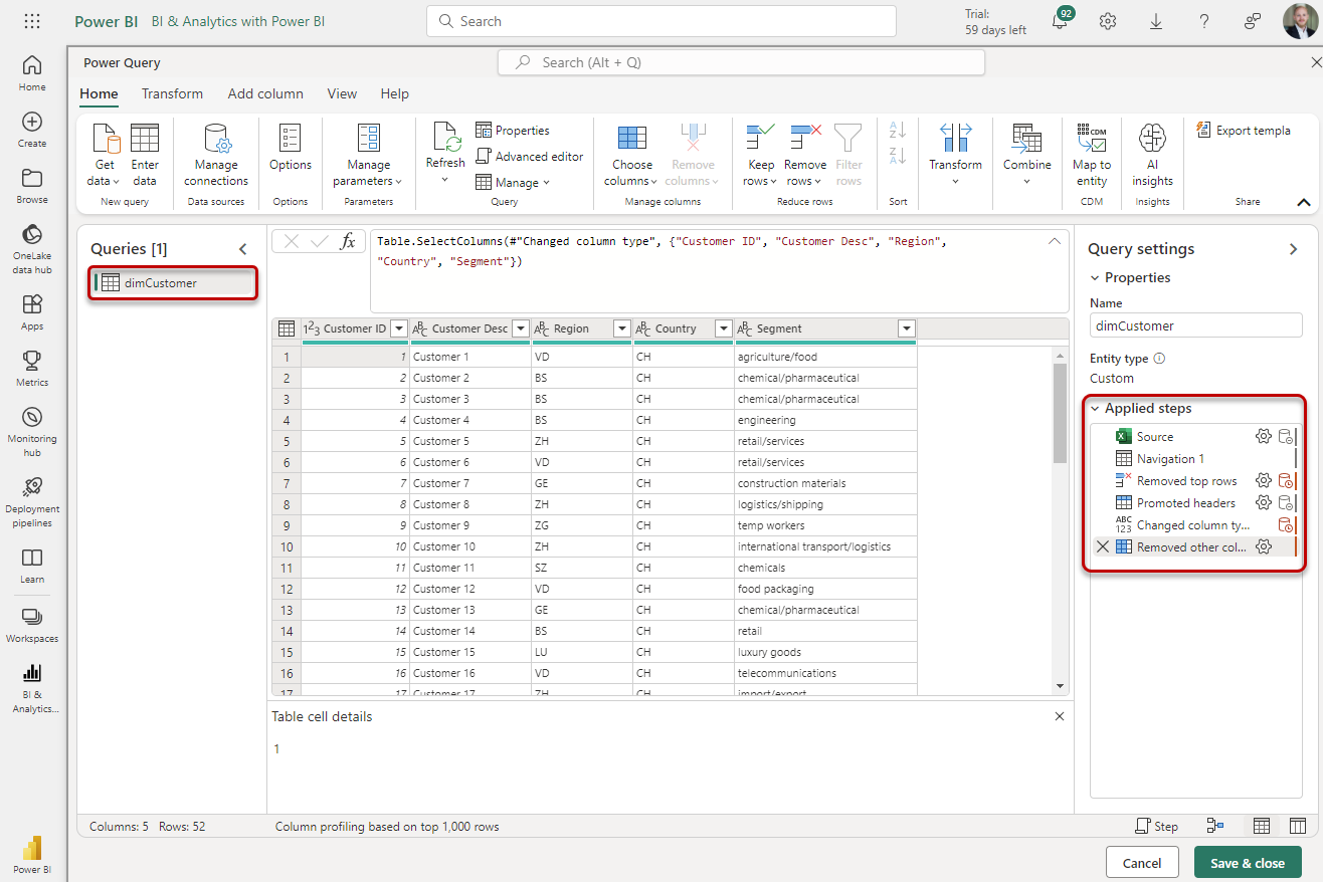

6. Use Power Query to prepare the data and model result data tables

7. Make use of Power Query features only available in Dataflows

Power Query in Dataflows has some extra features compared to Power Query in Power BI Desktop, most importantly:

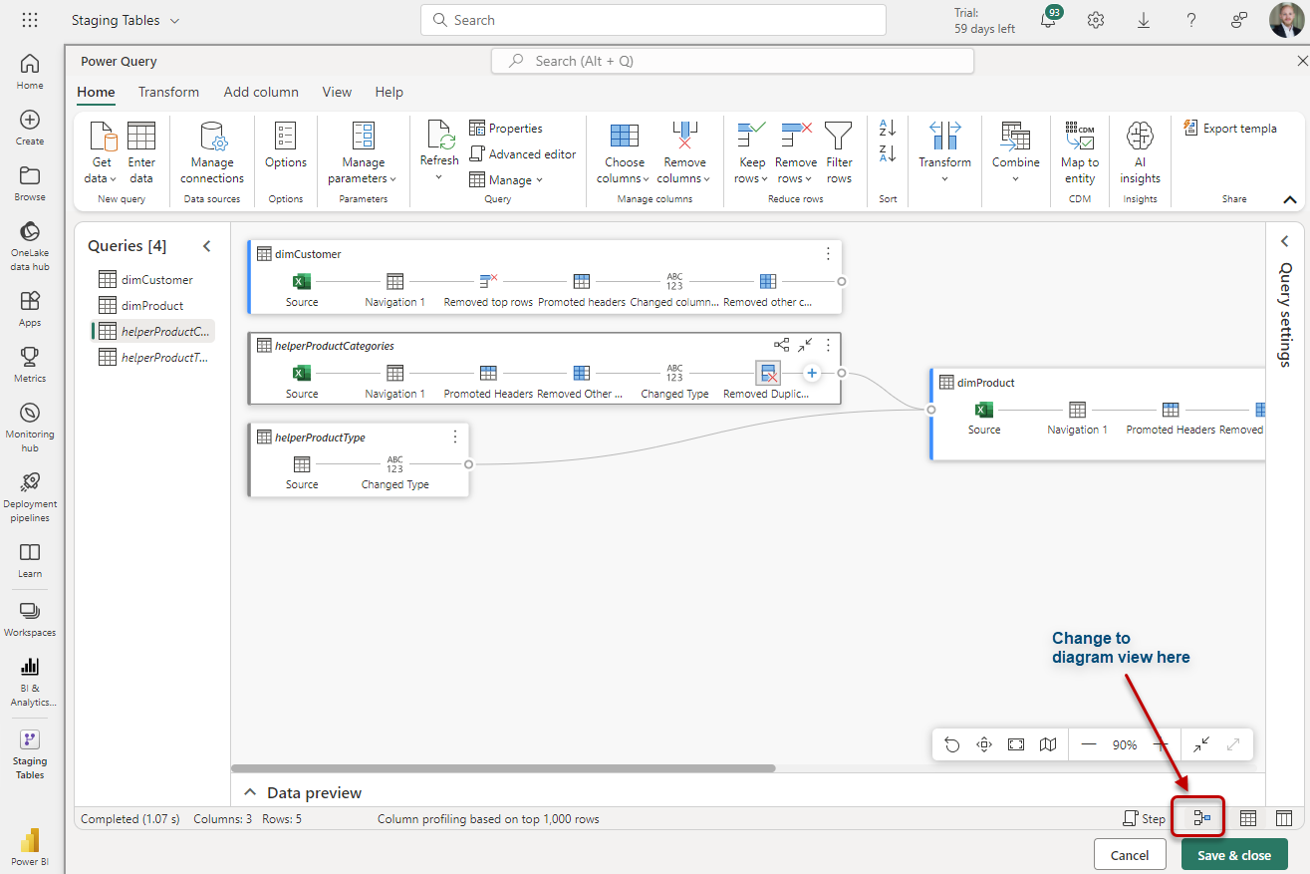

There is a diagram view that helps you to understand the flow of data visually:

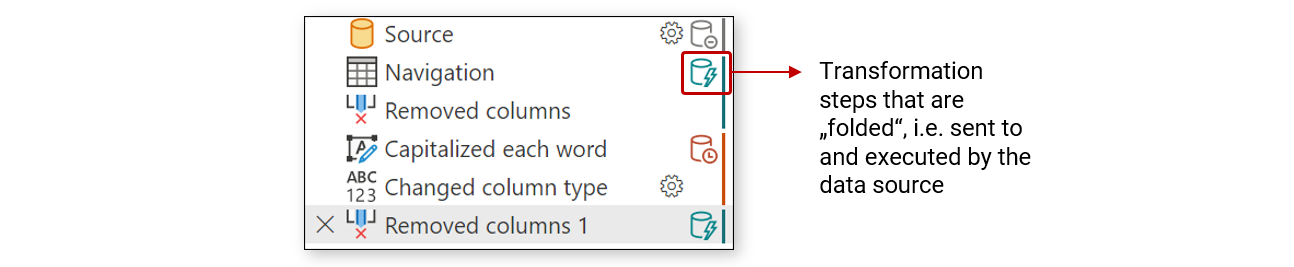

Power Query in a Dataflow tells you which transformation steps are sent to and executed by the data source. This mechanism is called Query Folding and it usually significantly improves the performance of a Power Query ETL process. Please note, only a few data sources support Query Folding, most noteworthy SQL data sources.

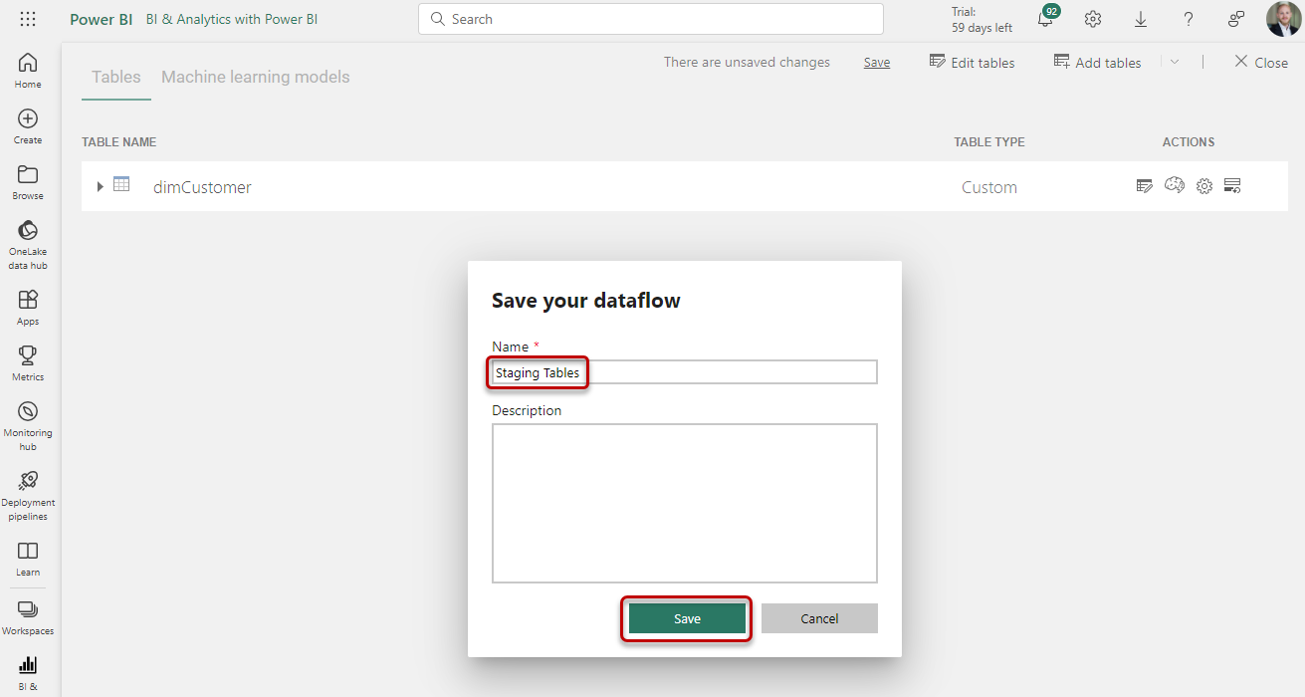

8. Save the Dataflow

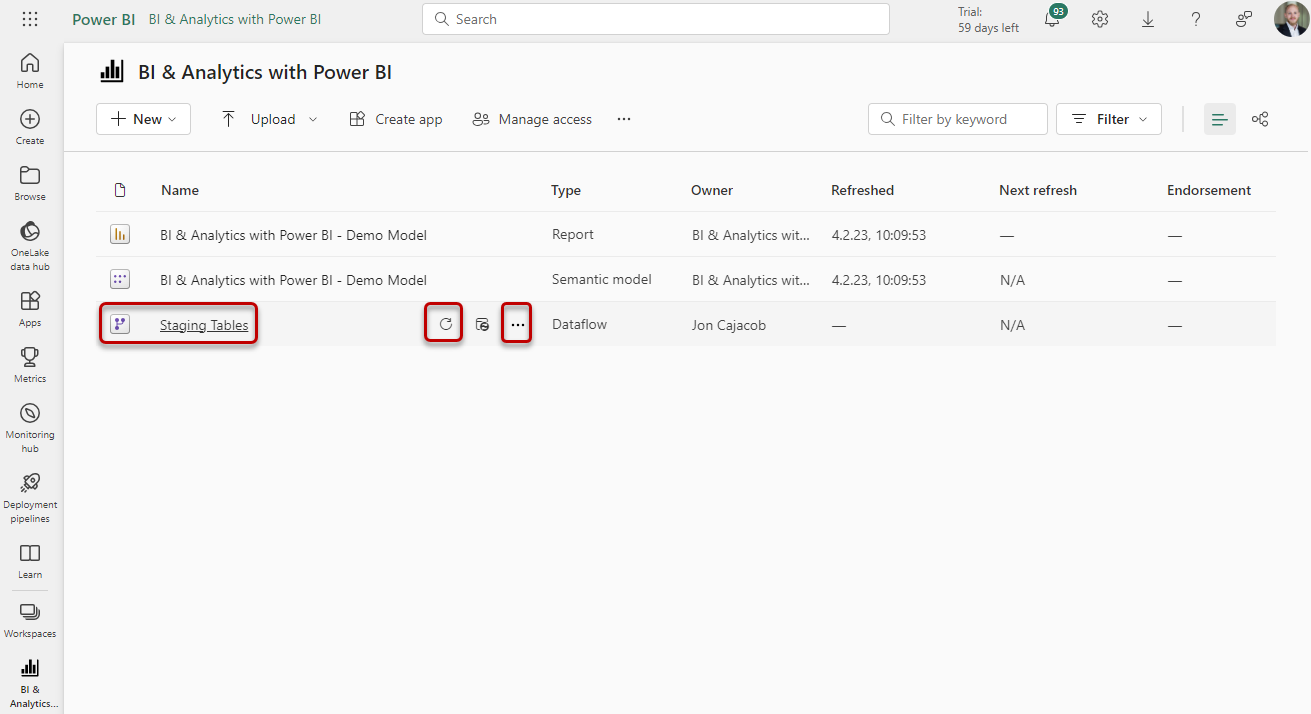

9. Run the Dataflow to load data to tables

Please note, when creating (or changing) a query in the Power Query editor in a Dataflow and then saving the Dataflow, the affected tables are there but empty until a refresh has run and succesfully completed.

As with Datasets, data refresh and scheduling settings can be accessed via the three dots.